Describe Apache HBase. Differentiate between HDFS and HBase.

APACHE HBASE

HBase is a column-oriented, distributed database built on top of the Hadoop Distributed File System (HDFS). It is an open-source project and scales horizontally. Its data model is similar to Google's Bigtable and is designed to provide fast random access to massive volumes of structured data. It relies on the fault tolerance provided by HDFS.

Apache HBase is a distributed, scalable, Hadoop-based NoSQL store for big data. HBase can host very large tables (billions of rows and millions of columns) and provides real-time, random read/write access to Hadoop data. HBase is a wide-column data store modeled on Google Bigtable, the database that runs on top of Google's proprietary file system. HBase brings Bigtable-like capabilities, including random read/write access, to Hadoop-compatible file systems such as MapR XD. It scales linearly over very large datasets and makes it easy to combine data sources with heterogeneous structures and schemas.
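To make the "billions of rows and millions of columns" idea more concrete, here is a minimal sketch of a sparse, column-oriented table. It is a toy in-memory model, not the HBase client API: the class `ToyTable`, its `put`/`get` methods, the row keys, and the column names are all hypothetical. The `family:qualifier` naming follows the way HBase groups columns into column families.

```python
from collections import defaultdict


class ToyTable:
    """Toy in-memory stand-in for an HBase-style table (NOT the HBase API)."""

    def __init__(self):
        # row key -> {"family:qualifier" -> value}
        # Only cells that were written exist, so empty columns cost nothing.
        self._rows = defaultdict(dict)

    def put(self, row_key, column, value):
        """Write a single cell, addressed by row key and column name."""
        self._rows[row_key][column] = value

    def get(self, row_key, column=None):
        """Read one cell, or the whole row when no column is given."""
        row = self._rows.get(row_key, {})
        return dict(row) if column is None else row.get(column)


# Hypothetical data: each row may use a different set of columns.
table = ToyTable()
table.put("user#1001", "info:name", "Asha")
table.put("user#1001", "info:email", "asha@example.com")
table.put("user#1002", "info:name", "Ben")
table.put("user#1002", "stats:logins", 7)

print(table.get("user#1001", "info:name"))
print(table.get("user#1002"))
```

Because each row stores only the cells that were actually written, a table can define a very large number of possible columns without every row paying for them.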

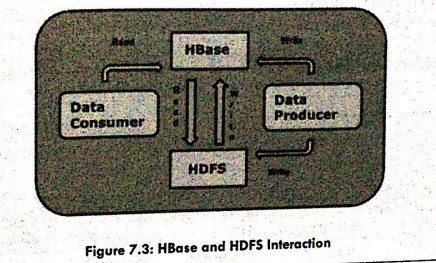

HBase is a Hadoop ecosystem component that provides random, real-time read/write access to data in HDFS. Data can be stored in HDFS directly or through HBase. Data consumers use HBase to read and access data in HDFS at random. HBase thus sits on top of HDFS and provides both read and write access.

Differences between HDFS and HBase.

HDFS

- HDFS is a distributed file system suitable for storing large files.

- HDFS does not support fast individual record lookups.

- It is designed for high-latency batch processing; it has no concept of low-latency access to individual records.

- It provides only sequential access to data.

HBase

- HBase is a database built on top of HDFS.

- HBase provides fast lookups for large tables.

- It provides low latency access to single rows from billions of records (Random access).

- HBase provides random access by storing data in indexed files on HDFS, with rows kept sorted by row key, so individual rows can be located quickly.
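The contrast between sequential access and random access can be illustrated with a small sketch. This is a toy comparison under stated assumptions, not how either system is implemented: the records and the function names `sequential_lookup` and `indexed_lookup` are hypothetical, and a binary search over sorted keys stands in for whatever index a real store maintains.

```python
import bisect

# Hypothetical records, kept sorted by key as an indexed store would keep them.
records = [(f"row{i:06d}", f"value-{i}") for i in range(100_000)]
keys = [key for key, _ in records]


def sequential_lookup(target):
    """File-style access: scan from the start, counting records examined."""
    examined = 0
    for key, value in records:
        examined += 1
        if key == target:
            return value, examined
    return None, examined


def indexed_lookup(target):
    """Index-style random access: binary-search the sorted keys."""
    i = bisect.bisect_left(keys, target)
    if i < len(keys) and keys[i] == target:
        return records[i][1]
    return None


value, examined = sequential_lookup("row099999")
print(value, examined)
print(indexed_lookup("row099999"))
```

The sequential version has to examine every record before it reaches the last one, while the indexed version needs only about 17 comparisons for 100,000 keys. That difference is the reason a plain file system suits batch scans and an indexed store suits single-row lookups.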
