Explain how tokenization and stemming are done in text mining.

1) Tokenization

Tokenization is defined as the process of segmenting a text or texts into tokens by white space or punctuation marks. Tokenization can be applied to source code in C, C++, and Java, as well as to texts written in a natural language. However, the scope of this study is restricted to natural-language text, despite that possibility. Morphological analysis is required for tokenizing texts written in oriental languages such as Chinese, Japanese, and Korean. So here, omitting morphological analysis, we explain the process of tokenizing texts written in English.

Optional, from an answer-writing point of view: if asked to explain the process of tokenization, write only the part enclosed in the double brackets below.

((

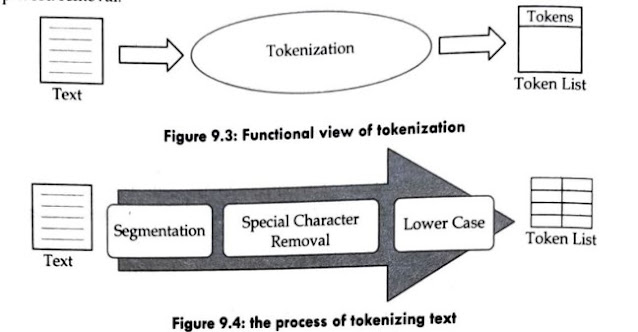

The functional view of tokenization is illustrated in figure 9.3. A text is given as the input, and the list of tokens is generated as the output. The text is segmented into tokens by white space or punctuation marks. As subsequent processing, the words which include special characters or numerical values are removed, and the remaining tokens are converted to lowercase. The list of tokens becomes the input of the next steps of text indexing: stemming or stop-word removal.

The process of tokenizing a text is shown in figure 9.4. The given text is partitioned into tokens by white space, punctuation marks, and special characters. The words which include one or more special characters, such as "16%," are removed. The first character of each sentence is in uppercase, so it should be converted to lowercase. Redundant words should be removed after the steps of text indexing. ))

Figure 9.5: The example of tokenizing text

The example of tokenizing a text is illustrated in figure 9.5. In the example, the text consists of two sentences. The text is segmented into tokens by white space, as illustrated on the right side in figure 9.3. The first word of the two sentences, "Text," is converted into "text," by the third tokenization step. In the example, none of the words contains a special character, so the second step is skipped.
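The three steps described above (segmenting, removing words with special characters or numbers, and lowercasing) can be sketched in Python roughly as follows. This is only a minimal illustration, not the method from the figures: the function name `tokenize`, the set of stripped punctuation marks, and the use of `str.isalpha` as the filter are all assumptions made for this sketch. One consequence of that filter is that hyphenated words and contractions are also dropped.

```python
def tokenize(text):
    """Rough sketch of the three tokenization steps (illustrative only)."""
    # Assumed set of punctuation marks to strip from the edges of a word.
    punctuation = ".,;:!?\"'()[]"

    tokens = []
    # Step 1: segment the text by white space.
    for word in text.split():
        # Strip punctuation marks attached to the start or end of the word.
        word = word.strip(punctuation)
        if not word:
            continue
        # Step 2: remove words that still contain special characters or digits.
        if not word.isalpha():
            continue
        # Step 3: convert the token to lowercase.
        tokens.append(word.lower())
    return tokens


print(tokenize("Text mining is fun. Text has 16%, noise."))
```

With this input, "16%," is dropped in the second step and both occurrences of "Text" become "text" in the third.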

2) Stemming

Stemming refers to the process of mapping each token generated in the previous step to its root form. Stemming rules, which associate tokens with their root forms, are required for implementing it. Stemming is usually applicable to nouns, verbs, and adjectives, as shown in figure 9.6. The list of root forms is generated as the output of this step.

In stemming, nouns given in their plural form are converted into their singular form, as shown in figure 9.6. To convert a noun into its singular form, the character "s" is removed from it in the regular cases. However, we need to consider some exceptional cases in the stemming process: for some nouns whose singular form already ends with the character "s," such as "process," the suffix "es" should be removed instead of "s," and for others the plural and singular forms are completely different from each other, as in the case of "child" and "children." Before stemming nouns, we need to classify words as nouns or not by POS (Part of Speech) tagging. Here, we use the association rules of each noun with its own plural forms for implementing the stemming.
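The noun rules above can be sketched as a small lookup-plus-suffix routine. This is a hedged illustration under several assumptions: the name `singularize`, the `IRREGULAR_PLURALS` table, and the `ES_ENDINGS` list are hypothetical and far from complete, and the input is assumed to have already been identified as a noun by POS tagging, which is not shown here.

```python
# Hypothetical association rules: irregular plural form -> singular form.
IRREGULAR_PLURALS = {
    "children": "child",
    "men": "man",
    "women": "woman",
}

# Assumed endings for which the suffix "es" is removed instead of "s".
ES_ENDINGS = ("sses", "xes", "ches", "shes")


def singularize(noun):
    """Map a lowercase noun to its singular form (illustrative rules only)."""
    # Exceptional case: plural and singular forms are completely different.
    if noun in IRREGULAR_PLURALS:
        return IRREGULAR_PLURALS[noun]
    # Exceptional case: remove "es", e.g. "processes" -> "process".
    if noun.endswith(ES_ENDINGS):
        return noun[:-2]
    # Regular case: remove the trailing "s", but leave singular nouns
    # that end in "ss" (such as "process") untouched.
    if noun.endswith("s") and not noun.endswith("ss"):
        return noun[:-1]
    return noun


for word in ["texts", "processes", "process", "children"]:
    print(word, "->", singularize(word))
```

A real stemmer needs many more rules than this; singular nouns ending in a single "s," such as "bus," would be handled incorrectly by the regular case here.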
